2024 is set to usher in a string of frightening advances in artificial intelligence, from engineered pathogens and killer robots to fake content that looks more "real" than reality.

Most innovations in artificial intelligence (AI) improve people's lives, for example by enabling better medical diagnostics and scientific discovery.

But alongside these are dangerous applications of AI, ranging from killer drones to AI that threatens the future of humanity.

Live Science has picked out some of the scariest AI breakthroughs that could happen in 2024.

Artificial general intelligence (AGI)

No one knows exactly why OpenAI CEO Sam Altman was fired and then reinstated in late 2023. But amid the chaos at OpenAI, rumors swirled about an advanced technology that could threaten the future of humanity.

Reuters reported that the technology, called Q* (pronounced Q-star), could represent a breakthrough toward realizing artificial general intelligence (AGI).

AGI is a hypothetical tipping point, also known as the "Singularity," at which AI becomes smarter than humans.

Current generations of AI still lag behind in areas where humans excel, such as context-based reasoning and genuine creativity. Most AI-generated content simply regurgitates the data the system was trained on.

But scientists say AGI could perform specific tasks better than humans. It could also be weaponized and used to create pathogens, launch large-scale cyberattacks, or orchestrate mass manipulation online.

OpenAI reaching this tipping point would certainly be a shock, but it is not beyond the realm of possibility. Sam Altman, for example, was already laying the groundwork for AGI in February 2023, when he outlined OpenAI's approach to AGI in a blog post.

Experts have begun predicting an imminent breakthrough. Nvidia CEO Jensen Huang said in November 2023 that AGI would be achieved within the next five years, Barron's reported.

Could 2024 be the breakout year for AGI? Only time will tell.

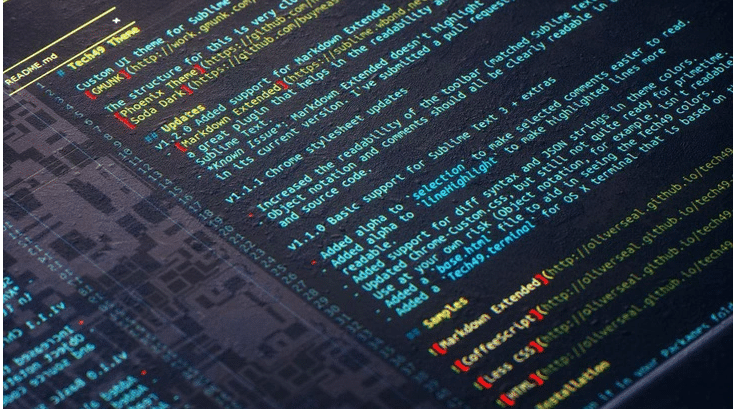

Election-rigging hyperrealistic deepfakes

One of the most pressing cyber threats is deepfakes: entirely fabricated images or videos of people, made to misrepresent, incriminate, or bully them.

AI deepfake technology is not yet good enough to be a significant threat, but that may be about to change.

AI can now generate deepfakes in real time, in other words on live video feeds, and it is becoming so good at generating human faces that people can no longer tell what is real and what is fake.

One study, published in the journal Psychological Science on Nov. 13, identified the phenomenon of "hyperrealism," in which AI-generated content is more likely to be perceived as "real" than genuine content.

Although tools exist that can help people detect deepfakes, they are not yet widespread or mature.

As AI matures, one frightening possibility is that people could deploy deepfakes to try to swing elections in various countries.

Widespread AI-powered killer robots

Governments around the world are increasingly incorporating AI into tools of war.

We have already seen AI drones hunting down soldiers in Libya with no human intervention.

In 2024, we are likely to see AI used not only in weapons systems but also in logistics and decision-support systems, as well as in research and development. In 2022, for instance, AI generated 40,000 novel, hypothetical chemical weapons.

According to NPR, Israel has also used AI to identify targets at least 50 times faster than humans can in the latest Israel-Hamas war.

But one of the most feared areas of development is lethal autonomous weapon systems (LAWS), or killer robots.

Several worrying developments suggest 2024 could be a breakout year for killer robots. In Ukraine, for instance, Russia has deployed the Zala KYB-UAV drone, which can recognize and attack targets without human intervention, according to a report from the Bulletin of the Atomic Scientists.